Using etched tungsten ditelluride at nearly absolute zero, scientists have observed electrons swirling around like whirlpools, behaving as a fluid. The methods could be used to design low-energy devices. Plus, eavesdropping on aliens, machine learning on solar data, and some new observatories are in the works.

Podcast

Show Notes

Researchers describe tech for eavesdropping on aliens

- Could we eavesdrop on communications that pass through our solar system? (Phys.org)

- “A Search for Radio Technosignatures at the Solar Gravitational Lens Targeting Alpha Centauri,” Nick Tusay et al., to be published in The Astronomical Journal (preprint)

Improving small sat onboard data processing

- CMU press release

JWST hints at a galaxy-filled future

Machine learning processes solar weather data

- SwRI press release

- “Efficient labelling of solar flux evolution videos by a deep learning model,” Subhamoy Chatterjee, Andrés Muñoz-Jaramillo and Derek A. Lamb, 2022 June 27, Nature Astronomy

Finding dark matter in the equations

- NYU press release

- “Joint Cosmic Microwave Background and Big Bang Nucleosynthesis Constraints on Light Dark Sectors with Dark Radiation,” Cara Giovanetti, Mariangela Lisanti, Hongwan Liu, and Joshua T. Ruderman, 2022 July 6, Physical Review Letters

Electrons swirl like water under specific conditions

- MIT press release

- “Direct observation of vortices in an electron fluid,” A. Aharon-Steinberg et al., 2022 July 6, Nature

What’s Up: Full Moon ahead, planets too

- Full Moon Guide: July – August 2022 (NASA)

- Saturn at opposition, coming up August 14 (EarthSky)

Optical telescopes to help LIGO

- Monash University press release

- University of Warwick press release

Transcript

Pamela, I am not happy.

What’s wrong, Erik?

The full moon is coming up, which means sky viewing is going to be terrible. And also my computer is still a furnace slash jet engine.

Happens every month.

I know! And next month, it will affect a big meteor shower.

Oh, that’s not good. I’m sorry to hear it. How about some amazing science to take your mind off it?

Sounds good. What have you got?

Eavesdropping on aliens, machine learning, dark matter equations, and swirling electrons.

Nice. I also have news on some grants awarded for cool, new technology.

Fantastic.

All of this and more, right here on the Daily Space.

I am your host Dr. Pamela Gay.

I am your host Erik Madaus.

And we’re here to put science in your brain.

Sometimes a paper crosses my screen that is science at its most delightful. Today, I get to present one of those papers. In a paper accepted into The Astronomical Journal, a team of graduate students from Penn State University describes how it just might be possible to eavesdrop on alien communications.

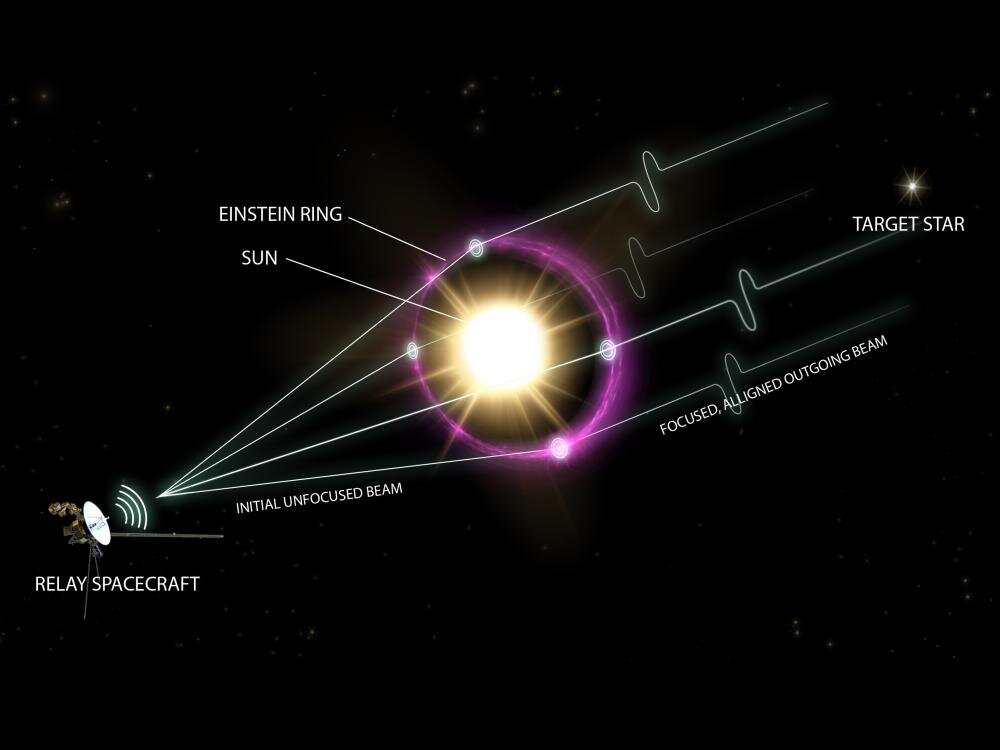

Like so many, this team finds the secret sauce for their science in relativity. Any large enough mass has the ability to bend light with its gravity, and our Sun is a large enough mass. In theory, it should be possible to use our Sun’s gravity like a cheap magnifying glass to focus light – including communications signals – onto a relay spacecraft that can beam those signals back to our planet Earth.

This technology was worked out as part of a graduate course at Penn State taught by Jason Wright. In addition to pointing out how this technique can be used to eavesdrop on distant communications, this team also discusses how it can purposely be used to allow humans to communicate across great distances one day. As Wright explains: Astronomers have considered taking advantage of gravitational lensing as a way to essentially build a giant telescope to look at planets around other stars. It has also been considered as a way that humans might communicate with our own probes if we ever sent them to another star. If an extraterrestrial technological species were to use our sun as a lens for interstellar communication efforts, we should be able to detect those communications if we look in the right place.

We can even imagine a future where star systems with compact objects are one day used as hubs that gather information from one direction and send them off in another. Student Nick Tusay explains: Humans use networks to communicate across the world all the time. When you use a cell phone, the electromagnetic waves are transmitted to the nearest cellular tower, which connects to the next tower, and so on. TV, radio, and internet signals also take advantage of network communication systems, which have many advantages over point-to-point communications. On an interstellar scale, it makes sense to use stars as lenses, and we can infer where probes would need to be located in order to use them.

This is very much future tech that is beyond what we can do today, but solidly in the category of things sci-fi writers should most definitely be including in their stories. To focus a source into an Einstein ring, a space probe would need to be placed 550 AU from the Sun. The Voyager missions are about 160 and 130 AU from the Sun. Right now, we just don’t have propulsion systems or spacecraft power systems capable of getting a relay mission out where it needs to go in a reasonable amount of time or to power it enough to beam high bandwidth data back to us.
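For anyone who wants to check where the 550 AU figure comes from, here is a minimal sketch of the arithmetic. It assumes the standard general-relativity result that a light ray passing a mass M at distance b is bent by an angle of 4GM/(c²b), so rays grazing the Sun's edge cross at a distance of roughly b²c²/(4GM). The constants and the function name `min_focal_distance_au` are our own choices for illustration, not anything taken from the paper.

```python
# Rough estimate of where the Sun's gravitational lens starts to focus light.
# Assumption: rays grazing the solar limb are bent by alpha = 4GM / (c^2 * b),
# so they converge at a distance d = b / alpha = b^2 * c^2 / (4GM).

GM_SUN = 1.32712440018e20   # Sun's gravitational parameter, m^3/s^2
C = 299_792_458.0           # speed of light, m/s
R_SUN = 6.957e8             # nominal solar radius, m
AU = 1.495978707e11         # astronomical unit, m


def min_focal_distance_au(impact_parameter_m=R_SUN, gm=GM_SUN):
    """Distance (in AU) at which rays passing at the given radius converge."""
    distance_m = impact_parameter_m ** 2 * C ** 2 / (4.0 * gm)
    return distance_m / AU


focal_au = min_focal_distance_au()
print(f"Minimum focal distance: about {focal_au:.0f} AU")
```

With these inputs the result lands just under 550 AU, in line with the number quoted above. Rays that pass farther from the Sun's edge focus even farther out, so a probe would need to sit at or beyond this distance.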

It’s still cool though, and I delight at the chance to eventually read a book where the first researchers use this kind of tech to capture some truly alien entertainment.

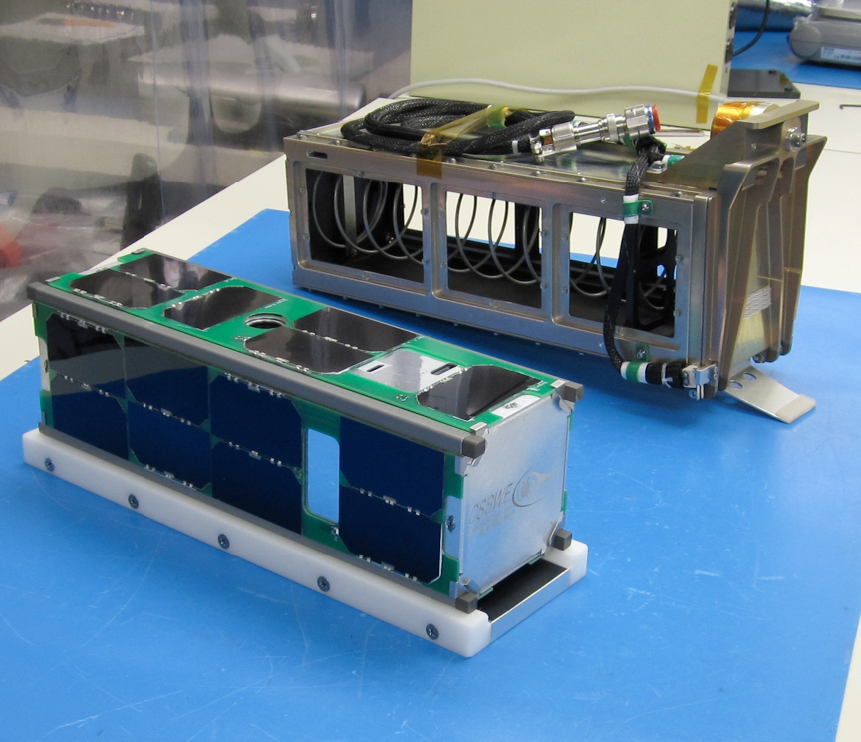

CubeSats are becoming regular parts of science collecting, not just experiments as they were treated initially. The problem is that the sheer number of spacecraft generates so much data that only a fraction of it can be sent back.

Researchers at Carnegie Mellon University want to implement a new system where the constellation could do some of its own processing in orbit using machine learning and only send back the most important data to the ground. They call this system “orbital edge computing”. One suggested application for this technology is imaging constellations. One example provided by PI Brandon Lucia is spotting wildfires before they get big enough to be a problem.

A new grant from the National Science Foundation (NSF) will allow the team to fund lots of grad students to work on the project and build some spacecraft prototypes for launch in a few years. Congratulations to the team at Carnegie Mellon. We look forward to hearing more about this new technology.

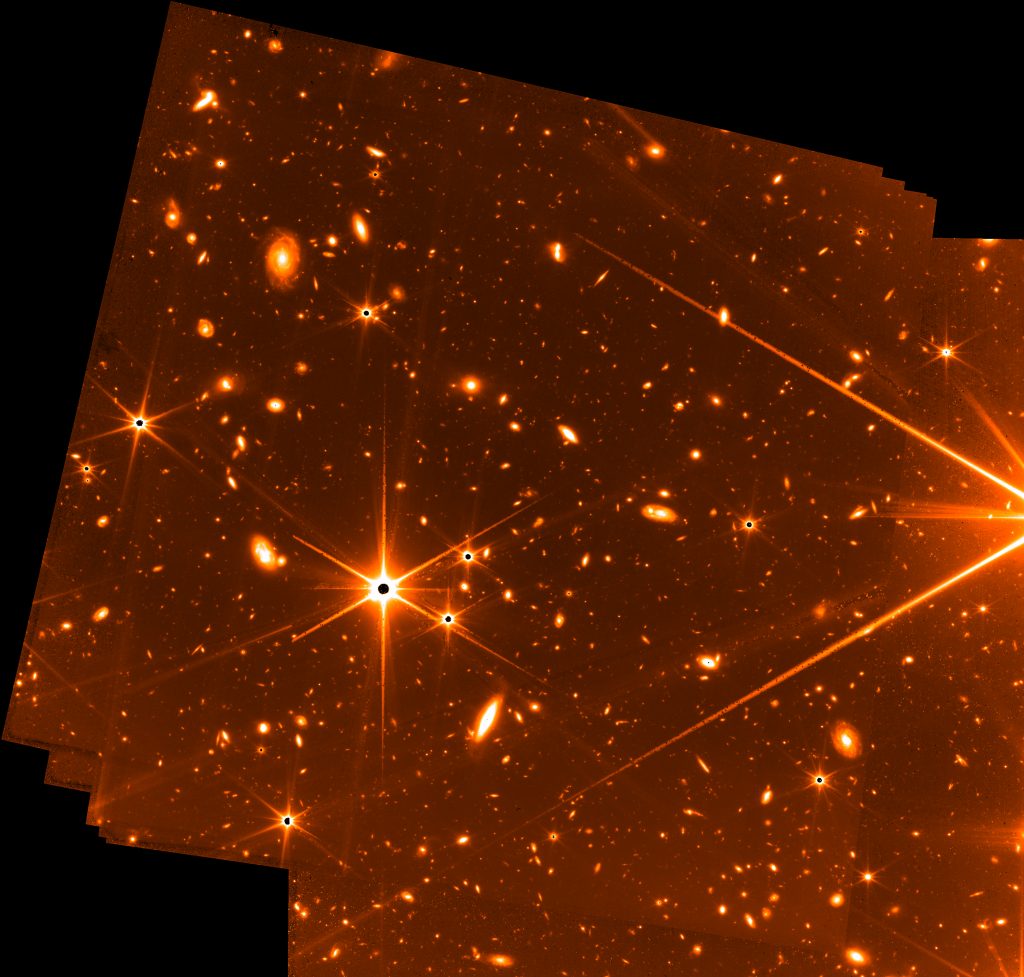

After a solid decade of delays, the folks at NASA are more than a little excited about the data coming out of the JWST. The first fully processed science data is slated to be released on July 12 at 10 am Eastern. We will be doing a watch party on our Twitch channel, CosmoQuestX.

While most folks are keeping everything hush-hush, there are some – I assume authorized – leaks, including a new image from JWST’s fine guidance sensor. A newly released image was built from the guide camera’s data as the camera worked to verify the telescope stayed on target. It is unclear exactly what JWST was studying, but it spent 32 hours pointed such that its fine guidance camera captured the sky near the star 2MASS 16235798+2826079. Based on how the released image appears to be mosaiced together with slightly different pointings, I am going to predict that JWST took a series of images of something that we’ll see on July 12.

The remarkable thing here is this image from the guide camera is now the deepest image of the universe that has ever been taken. It’s deeper than the Hubble Ultra-Deep Field, and while it contains a few stars, it is mostly galaxies — and mostly ancient galaxies shining faintly from the early universe. This is like taking family portraits with your car’s backup camera; you can, but that camera really isn’t designed to take great photos. JWST is the high-res camera you want to be using, and with rumors of scientists just breaking into tears the first time they see JWST’s images, we can only imagine that the images are better than anything we can imagine.

Soon we will know what this telescope has to show.

Until then, we have more science from software and theory.

As Erik mentioned earlier, one of the biggest problems we have with all these spacecraft is receiving all of the data they send back to Earth. And once you get all that data into your local systems, people have to process and analyze everything to come up with answers and solutions.

That’s my vague way of saying there is a lot of work to be done, and until the last decade or so, the work has been done by undergraduate and graduate students the world over. But in this last decade, we have seen a rise in machine learning usage, which means less time eyeballing all the data by hand, and more time coding and training algorithms to do the analysis for us.

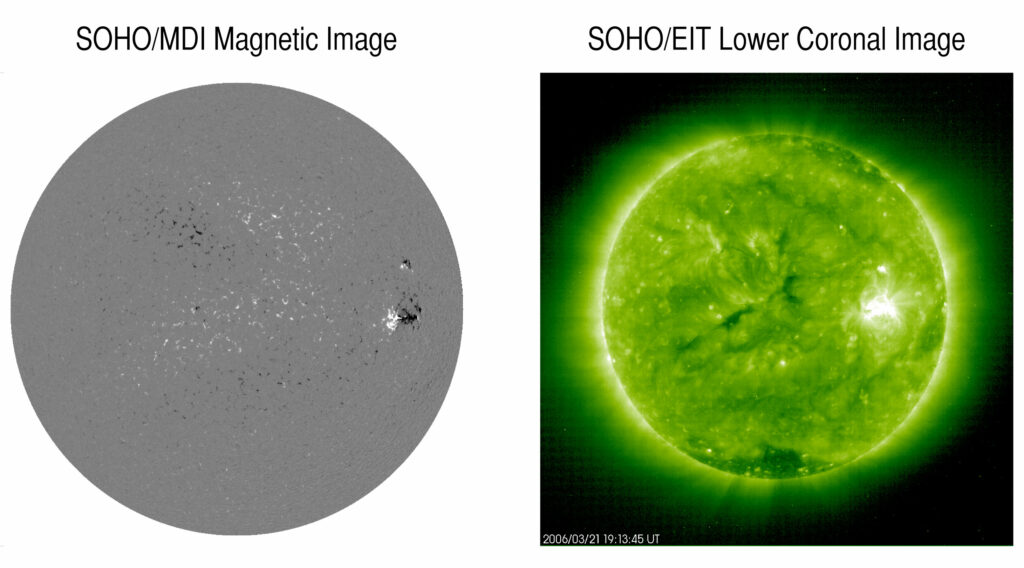

Now, scientists at the Southwest Research Institute have developed a machine-learning algorithm that can process data being returned from solar missions. In a new paper published in Nature Astronomy with lead author Subhamoy Chatterjee, the team details their findings while working with convolutional neural networks (CNNs). Chatterjee explains: New research shows how convolutional neural networks, trained on crudely labeled astronomical videos, can be leveraged to improve the quality and breadth of data labeling and reduce the need for human intervention.

Specifically, the team trained their algorithm on videos of the solar magnetic field. The goal was to identify where strong and complex fields emerged on the solar surface as these are the main precursors ahead of space weather events such as coronal mass ejections. Co-author Andrés Muñoz-Jaramillo explains: We trained convolutional neural networks using crude labels, manually verifying only our disagreements with the machine. We then retrained the algorithm with the corrected data and repeated this process until we were all in agreement. While flux emergence labeling is typically done manually, this iterative interaction between the human and machine learning algorithm reduces manual verification by 50%.
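The loop Muñoz-Jaramillo describes can be sketched in a few lines. This is a toy illustration only: a one-number threshold rule stands in for the convolutional neural network, the "human" is a lookup into a list of true labels, and all names (`fit_threshold`, `relabel_loop`, `ask_human`) are hypothetical. It is not the team's code.

```python
def fit_threshold(xs, labels):
    """Stand-in for training: pick the cut that disagrees with the fewest labels."""
    best_cut, best_errors = xs[0], len(xs) + 1
    for cut in sorted(set(xs)):
        errors = sum((x >= cut) != y for x, y in zip(xs, labels))
        if errors < best_errors:
            best_cut, best_errors = cut, errors
    return best_cut


def relabel_loop(xs, crude_labels, ask_human, max_rounds=20):
    """Retrain, hand only the disagreements to a human, and repeat."""
    labels = list(crude_labels)
    checked = set()
    for _ in range(max_rounds):
        cut = fit_threshold(xs, labels)
        # Only samples where the model and the current label disagree get reviewed.
        disputed = [i for i, x in enumerate(xs)
                    if (x >= cut) != labels[i] and i not in checked]
        if not disputed:
            break  # model and labels agree everywhere that has not been checked
        for i in disputed:
            labels[i] = ask_human(i)
            checked.add(i)
    return labels, checked


# Toy data: 100 samples, true class is "feature >= 0.5", nine crude labels flipped.
xs = [i / 100 for i in range(100)]
truth = [x >= 0.5 for x in xs]
flipped = {3, 17, 29, 41, 58, 66, 72, 85, 93}
crude = [(not t) if i in flipped else t for i, t in enumerate(truth)]

final_labels, checked = relabel_loop(xs, crude, ask_human=lambda i: truth[i])
print(f"Human checked {len(checked)} of {len(xs)} samples")
```

In this toy run the human only looks at the nine flipped labels instead of all one hundred samples. Real data is messier, and the savings will differ, but the shape of the loop is the same: train, compare, verify the disagreements, retrain.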

Additionally, they masked the videos bit by bit until the algorithm changed the classification, finding the moment it could detect the magnetic field shift and leading to a more useful database of detections. This is a good thing. We need to get better at predicting these events to protect Earth systems from solar interference and damage.
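The masking idea can also be sketched with a toy example. Here a list of numbers stands in for the frames of a video, a simple "any frame above a threshold" rule stands in for the trained network, and frames are blanked from the end of the sequence backward. The function names and the threshold of 5.0 are assumptions made up for illustration, and the team's actual masking scheme may differ.

```python
def classify(frames, threshold=5.0):
    """Toy classifier: flags a sequence if any frame reaches the threshold."""
    return max(frames) >= threshold


def first_decisive_frame(frames, classifier, fill=0.0):
    """Blank frames from the end backward until the classification flips.

    Returns the index of the frame whose removal changed the answer,
    or None if masking everything never changes it.
    """
    baseline = classifier(frames)
    masked = list(frames)
    for i in range(len(frames) - 1, -1, -1):
        masked[i] = fill
        if classifier(masked) != baseline:
            return i
    return None


# Hypothetical flux values that ramp up partway through the sequence.
flux = [0.1, 0.3, 0.2, 1.0, 4.0, 6.5, 7.0, 7.2]
decisive = first_decisive_frame(flux, classify)
print(f"Classification hinges on frame {decisive}")
```

The frame where the answer flips marks the earliest point at which this toy classifier has enough signal to call the event, which is the kind of timestamp that makes a detection database more useful.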

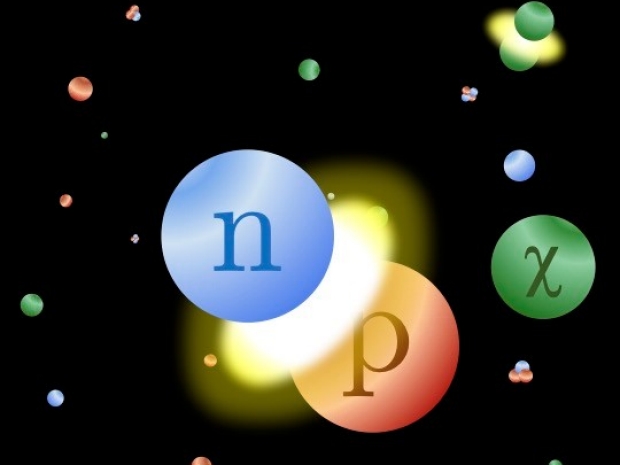

The bulk of our universe’s mass is in the form of some weird matter that can’t be seen or found using any of our normal methods that rely on interactions with the electromagnetic force. It can currently only be found through how its gravitational mass pulls on surrounding objects and bends the passage of light.

Trying to figure out what dark matter is made of is super hard and has researchers doing everything from slamming protons together at high energies to looking for tiny flashes of light from dark matter particles colliding with liquid in giant underground detectors, to even doing more math for graduate projects than I have done in my entire life.

And I have done a lot of math.

Theorists at New York University have been working on detailed models that describe how the presence of different kinds of dark matter particles would have influenced the evolution of our universe. In a new paper published in Physical Review Letters, researchers led by Cara Giovanetti demonstrate that certain kinds of dark matter particles would have affected the formation of elements during Big Bang nucleosynthesis and even left signs in the cosmic microwave background.

According to Giovanetti: Precision measurements of different parameters of the universe — for example, the amount of helium in the universe, or the temperatures of different particles in the early universe — can also teach us a lot about dark matter. Lighter forms of dark matter might make the universe expand so fast that these elements don’t have a chance to form. We learn from our analysis that some models of dark matter can’t have a mass that’s too small, otherwise, the universe would look different from the one we observe.

While this research doesn’t tell us what dark matter is, it does tell us what it isn’t, and it reminds us that the cosmic microwave background is the great test case against which theories for things small and large all must be compared.

Speaking of small things, and I mean extremely small things, scientists have been doing wild experiments to see how electrons flow. Generally, electrons don’t flow like water, with pretty streams and waves and whirlpools and evidence of classic fluid dynamics. The electrical current behavior is more independent, with electrons acting as individuals rather than groups.

Of course, scientists want to see if that is always true, and it turns out that using certain materials and specific conditions, you can make electrons flow like water. In a new paper published in the journal Nature, scientists have observed electrons flowing in vortices – whirlpools – while being squeezed through ultra-clean materials at near absolute zero.

In the experiment, the team used tungsten ditelluride, which has been found to be ultra-clean and exhibits exotic electronic properties under certain conditions. Co-author Leonid Levitov explains: Tungsten ditelluride is one of the new quantum materials where electrons are strongly interacting and behave as quantum waves rather than particles. In addition, the material is very clean, which makes the fluid-like behavior directly accessible.

Just to clarify – clean here describes how the interactions work out, not the actual grime on the material.

Using pure, single crystals of the metal and some electron-beam lithography and plasma etching, they patterned flakes of the tungsten ditelluride with a center channel. On either side of the channel, they etched circular chambers. They repeated the process with gold flakes as well for comparison. Then they ran a current through these patterned samples at 4.5 Kelvin. The electron current was measured using a nanoscale SQUID – a superconducting quantum interference device – which measures magnetic fields to a high degree of precision. And all of this delicate work allowed the team to observe just how the electrons flowed through those channels in the two materials.

The electrons in the gold didn’t reverse direction at all, even in the circular chambers; however, the electrons in the tungsten ditelluride not only reversed directions but actually began to flow around the side chambers. They created small whirlpools before flowing back out into the straight channel. That’s pretty amazing, and as Levitov notes: That is a very striking thing, and it is the same physics as that in ordinary fluids, but happening with electrons on the nanoscale. That’s a clear signature of electrons being in a fluid-like regime.

If you’re asking why in the world would anyone want to do this or even think to do this, the answer is science, first off. But second, engineers are always looking to improve designs and use less power, and this could help them make more efficient devices. Pretty neat stuff.

And now, Erik returns to talk about What’s Up in our night sky.

What’s Up

Looking forward to the week ahead, there are lots of things to see in the night sky. The Full Moon will be on July 13. It will also be at perigee, making it a few percent bigger in angular size than at other times. This full moon will be the biggest one in 2022. Tides will also be bigger, which would help raise any stuck ships as it did for the Ever Given.
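If you want to put a number on "a few percent," here is a quick sketch. The distances are round, assumed values (a mean Earth-Moon distance of about 384,400 km and a close perigee of about 357,300 km), not figures from the NASA guide linked in the show notes.

```python
import math

MOON_RADIUS_KM = 1737.4        # mean lunar radius
MEAN_DISTANCE_KM = 384_400.0   # approximate average Earth-Moon distance (assumed)
PERIGEE_KM = 357_300.0         # approximate distance at a close perigee (assumed)


def angular_diameter_deg(distance_km, radius_km=MOON_RADIUS_KM):
    """Apparent diameter of the Moon in degrees, seen from the given distance."""
    return math.degrees(2.0 * math.atan(radius_km / distance_km))


average = angular_diameter_deg(MEAN_DISTANCE_KM)
closest = angular_diameter_deg(PERIGEE_KM)
percent_bigger = (closest / average - 1.0) * 100.0
print(f"{average:.3f} deg on average, {closest:.3f} deg at perigee, "
      f"{percent_bigger:.1f}% bigger")
```

With these assumed distances, the perigee Moon comes out several percent wider than an average full moon, which is noticeable in side-by-side photos but hard to spot by eye.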

2021 seems so long ago.

Later, on the 15th, Saturn will be within four degrees of the Moon.

This month’s full moon won’t interfere with too many things besides the usual, but next month? Oh, boy. Next month is the Perseid meteor shower, and this year, that shower peaks within two days of the full moon, which could wash out the fainter meteors.

At least the gas giants will finally be in a good position to view, with Saturn at opposition on August 14.

You can see many things in the night sky with a telescope, either through the eyepiece or with a digital sensor. There are some things you can’t see, like gravitational waves. To image those you need special telescopes. There are currently two telescopes capable of doing this, both in the United States. One key problem is that it is hard to correlate the gravitational events with the release of optical wavelength light, which is important to study the events. This has been done but only once, and it took hours after the gravitational waves were released.

A team from the University of Warwick in the U.K. and Monash University in Australia are trying to solve this problem and bring the detection time down to minutes. They are building two new pairs of telescopes on opposite sides of the world. These telescopes have the glorious backronym GOTO, meaning Gravitational Wave Optical Transient Observer. This is funny because GoTo is the generic name for systems on small commercial telescopes that automatically find things for their lay users.

Each site, one on the Canary Islands and one at Siding Spring Observatory, will have sixteen forty-centimeter telescopes capable of viewing the entire sky in a few days. The telescopes at the Canary Islands site are already set up. With £3.2 million in new funding, the team can finally build the second set of telescopes in Australia, in time for the next LIGO observations in 2023.

As always, we look forward to hearing more about these new observatories and their results.

This has been the Daily Space.

You can find more information on all our stories, including images, at DailySpace.org. As always, we’re here thanks to the donations of people like you. If you like our content, please consider joining our Patreon at Patreon.com/CosmoQuestX.

Credits

Written by Pamela Gay, Beth Johnson, Erik Madaus, and Gordon Dewis

Hosted by Pamela Gay, Beth Johnson, and Erik Madaus

Audio and Video Editing by Ally Pelphrey

Content Editing by Beth Johnson

Intro and Outro music by Kevin MacLeod, https://incompetech.com/music/

We record most shows live, on Twitch. Follow us today to get alerts when we go live.
