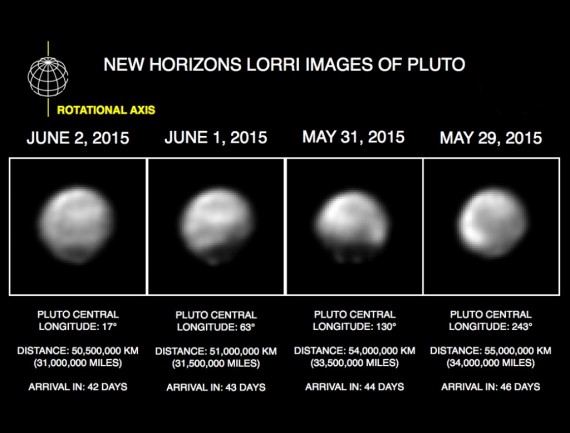

If, like me, you’re following the steady stream of images coming back from New Horizons, you may be wondering why the images kind of look… well… like Pluto is more Vesta-shaped than Ceres-shaped.

When the images appear in the news, like in this compilation appearing on Forbes, you’re seeing images the New Horizons team has processed within a pixel of their lives.

While there is likely some truth in the color variations of these images (yes, Pluto likely has albedo variations on its surface), any details you try to map are going to be about as accurate as Percival Lowell’s maps of Mars.

Raw image details: 2015-06-11, 05:27:45 UTC · Exp: 100 msec · Target: PLUTO · Range: 39.6M km

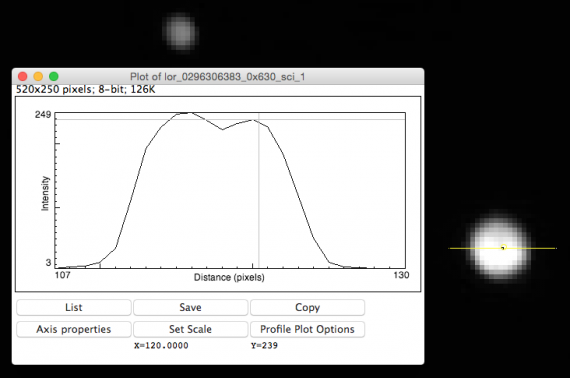

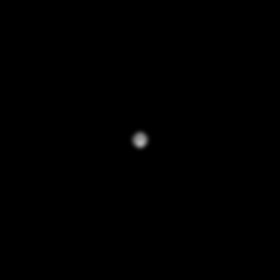

If you go to the New Horizons site, you can get your hands on the raw images. The latest image (without saturation) is at right. I opened this image in the stupidly easy to use (and really fast to load) Salsa-J image software. A profile of the Pluto light blob is shown below. Based on that profile, Pluto is a whopping 17 pixels (give or take) across. This means that the good folks (and I mean that) at New Horizons have worked magic to expand 17 pixels of information into 170 pixels of information. This kind of magic is called deconvolution, and it’s the same magic that real police use to enhance images, and that pretend police on crime dramas use to turn someone’s reflection in eyeglasses into a stunning image that could be an Instagram profile photo.

Pluto’s light profile.
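If you'd rather not open Salsa-J, the same kind of profile measurement can be roughed out in a few lines of Python. This is only a sketch: the frame below is a synthetic stand-in (not a real LORRI image), and both the function name `profile_width` and the 10% cutoff `threshold_fraction` are assumptions made for illustration.

```python
import numpy as np

def profile_width(image, threshold_fraction=0.1):
    """Return (width_in_pixels, profile) for the row through the brightest pixel.

    threshold_fraction is a hypothetical cutoff: a pixel counts as part of
    the blob if it rises above this fraction of the peak, after subtracting
    the row's median as a crude background estimate.
    """
    row, _ = np.unravel_index(np.argmax(image), image.shape)
    profile = image[row].astype(float)
    signal = profile - np.median(profile)
    width = int(np.count_nonzero(signal > threshold_fraction * signal.max()))
    return width, profile

# Stand-in for a raw frame: a small disk, brightest at its centre, on a
# dark 64x64 background. A real frame would be loaded from a file instead.
yy, xx = np.mgrid[:64, :64]
r = np.hypot(xx - 32, yy - 32)
frame = np.where(r < 8.5, 1.0 - 0.3 * (r / 8.5) ** 2, 0.0)

width, profile = profile_width(frame)
upscale = 10  # the factor that turns ~17 pixels across into ~170

print(f"blob width: {width} px")
print(f"bounding box measured: {width ** 2} px, displayed: {(width * upscale) ** 2} px")
```

The last line is the uncomfortable part: blowing the blob up by 10 on each side gives 100 times as many pixels on screen, but not a single new measurement.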

So, back in the real world, deconvolution is less than perfect, but there is software out there that can make it easy. I’m not exactly sure what the New Horizons team is doing (and I’ve asked them for the full rundown, but they’re a bit busy at the moment), but whatever it is, it is magic.

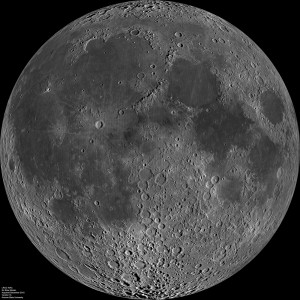

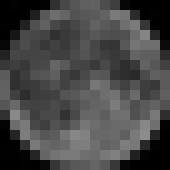

Below is the image I’m going to start with. It is a mosaic of LROC/WAC images and is pretty [expletive] high-res. The image I’m starting with is just a “small” 1400×1400 version that is on NASA’s site. Isn’t it shiny? Now let’s consider what happens when we make this image just 17 pixels across. Using Photoshop, I first made the image 17 pix by 17 pix, and then I took into account that the LORRI camera has a Point Spread Function (this is how the light is smeared by the optics) of 1.8-2.4 pixels along rows and 2.5-3.1 pixels along columns. It’s not easy to introduce asymmetric smearing with Photoshop (and I’m feeling too lazy to open IRAF), so I applied an optimistic Gaussian smoothing of 2.4 pixels (real PSFs aren’t actually Gaussian) and then I adjusted the levels so the brightest grey point became white. We have accomplished a Pluto-like image of the Moon! (Caveat: The point at which I smeared the image may not have been entirely right.)

LROC WAC image of the Moon

Same image, 17x17pix

With 2.4px Gaussian

With white point adjusted

The Moon reduced to Pluto image scale.
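The three Photoshop steps above (shrink to 17×17, smear, stretch the levels) can be sketched with NumPy alone. Treat this as an approximation under stated assumptions rather than a reproduction: `synthetic_moon` is a made-up stand-in for the LROC WAC mosaic, the shrink is a plain block average (Photoshop's resampling may differ), and the 2.4 px is taken to be the Gaussian's standard deviation, which is a guess at how the blur setting and the LORRI figures relate.

```python
import numpy as np

def synthetic_moon(size=1400, seed=0):
    """Hypothetical stand-in for the mosaic: a bright disk with dark patches."""
    rng = np.random.default_rng(seed)
    yy, xx = np.mgrid[:size, :size]
    c = (size - 1) / 2
    moon = (np.hypot(xx - c, yy - c) < 0.48 * size).astype(float)
    for _ in range(6):  # a few pretend "maria"
        cx, cy = rng.uniform(0.3 * size, 0.7 * size, 2)
        rad = rng.uniform(0.05 * size, 0.12 * size)
        moon[np.hypot(xx - cx, yy - cy) < rad] *= 0.5
    return moon

def block_average(img, out_size):
    """Shrink a square image to out_size x out_size by averaging blocks."""
    f = img.shape[0] // out_size          # block edge length in pixels
    n = f * out_size                      # crop so the blocks divide evenly
    return img[:n, :n].reshape(out_size, f, out_size, f).mean(axis=(1, 3))

def gaussian_blur(img, sigma):
    """Symmetric Gaussian blur: rows first, then columns, edges replicated."""
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    k /= k.sum()
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)

moon = synthetic_moon()                      # 1400 x 1400
small = block_average(moon, 17)              # 17 x 17
blurred = gaussian_blur(small, sigma=2.4)    # assumed: 2.4 px is the sigma
stretched = blurred / blurred.max()          # brightest grey becomes white

print(small.shape, blurred.shape, round(float(stretched.max()), 3))
```

An asymmetric smear closer to the quoted row and column figures would only need a different `sigma` for each of the two passes in `gaussian_blur`.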

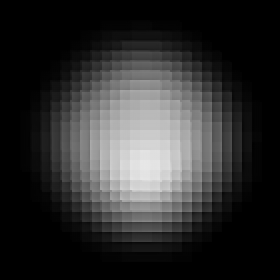

My failure to resurrect the Moon.

So, with the Moon reduced to how New Horizons sees Pluto, let’s see what we can do with Photoshop. It turns out… NADA. I took that fuzzy blob and I blew that fuzzy blob up by a factor of 10 and tried to deconvolve it using Photoshop’s Smart Sharpen (which uses a deconvolution algorithm). Even knowing exactly what kind of blur the image had, I couldn’t recover anything fabulous. What I got… is that last image.
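For anyone who wants to poke at deconvolution outside Photoshop, here is a minimal sketch using Richardson-Lucy, a standard textbook algorithm. To be clear about the assumptions: this is not known to be what Smart Sharpen does, nor what the New Horizons team does. The scene is a made-up 17-pixel disk with two dark patches, the blur is a known 2.4 px Gaussian, there is no noise, and the deconvolution runs at the native pixel scale rather than on a 10x enlargement. In other words, it is about as kind to the algorithm as a test can be.

```python
import numpy as np

def gaussian_psf(sigma):
    """Normalised 2-D Gaussian kernel, truncated at 3 sigma."""
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1)
    g = np.exp(-0.5 * (x / sigma) ** 2)
    psf = np.outer(g, g)
    return psf / psf.sum()

def convolve_same(img, kernel):
    """2-D convolution via zero-padded FFTs, cropped back to img's shape."""
    h, w = img.shape
    kh, kw = kernel.shape
    shape = (h + kh - 1, w + kw - 1)
    spectrum = np.fft.rfft2(img, shape) * np.fft.rfft2(kernel, shape)
    full = np.fft.irfft2(spectrum, shape)
    top, left = (kh - 1) // 2, (kw - 1) // 2
    return full[top:top + h, left:left + w]

def richardson_lucy(observed, psf, iterations=50):
    """Iteratively refine an estimate so that, once blurred, it matches observed."""
    mirrored = psf[::-1, ::-1]
    # Corrects for light that the blur pushes off the edge of the frame.
    norm = np.maximum(convolve_same(np.ones_like(observed), mirrored), 1e-12)
    estimate = np.full_like(observed, observed.mean())
    for _ in range(iterations):
        model = np.maximum(convolve_same(estimate, psf), 1e-12)
        correction = convolve_same(observed / model, mirrored) / norm
        estimate = np.maximum(estimate * correction, 0.0)
    return estimate

def rms(a, b):
    return float(np.sqrt(np.mean((a - b) ** 2)))

# Hypothetical scene: a 17-pixel disk with two dark patches in a 33x33 frame.
yy, xx = np.mgrid[:33, :33]
truth = (np.hypot(xx - 16, yy - 16) < 8.5).astype(float)
truth[np.hypot(xx - 13, yy - 14) < 2.5] = 0.4
truth[np.hypot(xx - 20, yy - 19) < 1.5] = 0.6

psf = gaussian_psf(2.4)
observed = np.maximum(convolve_same(truth, psf), 0.0)  # noise-free blur
restored = richardson_lucy(observed, psf)

flat = np.full_like(observed, observed.mean())
misfit_flat = rms(convolve_same(flat, psf), observed)
misfit_restored = rms(convolve_same(restored, psf), observed)

print("misfit to the data, flat first guess:", misfit_flat)
print("misfit to the data, after deconvolution:", misfit_restored)
print("error against the true scene, blurred:", rms(observed, truth))
print("error against the true scene, restored:", rms(restored, truth))
```

The two "misfit" numbers say how well the estimate explains the blurry data; the two "error" numbers say how close it is to the scene you actually care about. Those are not the same thing, and with real noise and an imperfectly known PSF the gap between them only gets wider.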

Moral? Don’t start to believe that Pluto is anything other than round (but leave space for it to be really busted up, because we don’t actually know). Don’t fixate on what those patterns we see are, because they are just hints at a complex reality. Oh, and image deconvolution is really, really hard (and I *really* want to know how they did it).

I’m sure I see canals

I could be wrong, but I assumed they had multiple images and were stacking them. A single 17×17 pixel image isn’t much to work with, but if you have a dozen or so of them you can run them through photo stacking software like Lynkeos or DeepSkyStacker to interpolate the data and come up with much better images.

Hi Will, I’ve found out that they were using a small handful of images and will be amending this post.

Thank you for this wonderful explanation!!! I was wondering why we were getting these.

You would have to deconvolve before upsampling, not the other way ’round.

This is a well-written and technically accurate article, and I thank you for that! The problem, however, is not about pixellation. The problem is with human expectation. I have been waiting 9.5 years for the completion of the New Horizons mission, and have no problem waiting another few weeks for “real” empirical data. The problems are sociological: instant gratification and first-published article syndrome, which is also financially motivated.